I should preface this by saying I don’t know if we’re in an AI bubble or not. On the one hand, a lot of cheap and easy funding has enabled more AI businesses to flourish than the market can likely sustain. On the other, the fact that so many are talking about this bubble with such certainty kind of precludes it from happening (bubbles tend to burst before we realise we’re in one). But there’s certainly a race, and every race has its losers. Including those making bets.

If you’re an organiser making every effort to enrich your proposition to the markets you serve, then AI – and more specifically Large Language Models (LLMs) – presents a means of doing so without imperilling your core offering: the exhibition itself. AI improves engagement for the business communities you serve, allows you to do more with the data you harvest and (with a good systems architect in place) does so impossibly efficiently.

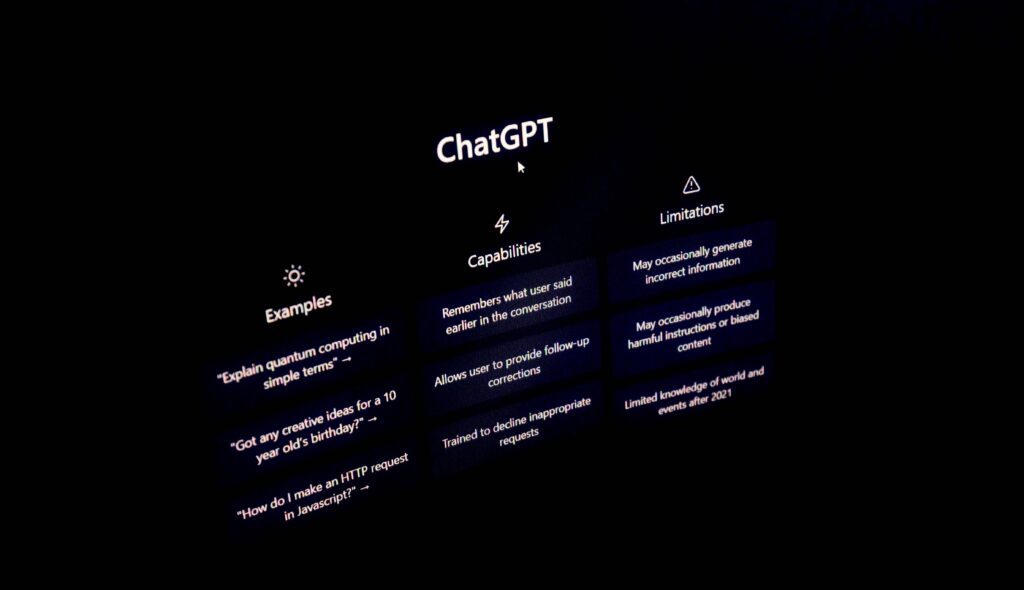

An LLM, by the way, is a deep-learning model trained on massive text data to understand and generate human-like language for exhibition-friendly tasks like chatbots, translation and writing.

But given all the talk of the AI bubble bursting, how do you know which unproven platform to integrate into your cherished organisation?

The answer, for the exhibition industry at least, may lie in smaller open-source options.

Eyes bigger than our stomachs

Most of the AI demands in our industry are pretty modest. At the show level, organisers wish to use AI models to improve productivity and enrich the interface for attendees, which means increasing our efficiency at routing customer requests, ranking search results and so on. Price per task matters, and so does speed. Every millisecond is crucial for the end user.

Or in the words of Adrian Lepers of open-source AI platform Hugging Face, speaking yesterday on the BBC Radio 4 programme Artificial Human [1]:

“Using these big LLMs is like using a Formula 1 car to go grocery shopping. Actually, most real world tasks that you see in companies are very narrow and specialised. That’s where the advantage of smaller and specialised models becomes really clear.”

With these big general-purpose models there’s a price for every request, no matter the complexity, because you’re firing up an enormous proprietary system to accomplish the task. The diversified companies behind them are able to offset that cost against existing revenue streams, but it is a significant cost nonetheless.

However, smaller open-source models are faster and cheaper to use and, from the end-user perspective, often capable of running on existing infrastructure and hardware.

Good news for an industry that still uses Microsoft Excel a lot more than it cares to mention.

OpenAI is not open source

If open source is the future, then why is OpenAI in trouble?

Well, it may surprise you to know that OpenAI’s flagship models (GPT-4o and DALL-E 3) are proprietary and closed-source. The point stands, though: projections suggest the company’s losses could reach US $14 billion or more in 2026, and with computing costs skyrocketing, it’s fair to say CEO Sam Altman will be visiting investors and power providers for most of the year. Record-breaking revenue growth, yes, but not without eye-wateringly massive financial losses.

But whether it’s proprietary (ChatGPT) or open-source (DeepSeek), remember the old adage: if you can use a product for free, then you’re the product.

This lack of encumbrance is the reason we’ve seen such a remarkable challenge to the Magnificent Six (Amazon, Meta, Microsoft, Alphabet, Apple and Oracle) from open-source models such as China’s DeepSeek, owned by Hangzhou-based hedge fund High-Flyer, and Qwen, owned by Alibaba’s cloud computing subsidiary, Alibaba Cloud. They get very close to the performance of the large proprietary LLMs, for free.

And price is an issue because …

The price today will not be the price tomorrow

If and when the bubble bursts and the AI landscape undergoes its subsequent consolidation, the end user will have to bear the costs. We all saw this post-COVID with home delivery businesses and airline mergers; service prices rose as competition reduced.

You can be sure the landscape will look very different when the initial gold rush is over. But open-source AI models are more resilient to this type of market shake-up, because they aren’t some prodigious proprietary system weighing heavily on an organisation’s bottom line.

Closed-source APIs (OpenAI or Anthropic) are also vulnerable to performance drops, like the ‘IQ-drop’ experienced by Claude in late 2025 [2].

Open-source AI means that exhibition organisers should be asking themselves today where else they can use artificial intelligence, and do so with the confidence that they can likely afford it tomorrow.

Moving quickly, and away from proprietary architecture where hugely leveraged businesses try to own everything, may grant you the competitive advantage you seek.

References:

1. Artificial Human (2026), Episode 3, BBC Radio 4 https://www.bbc.co.uk/programmes/m001wjf8

2. The Guardian (June 2025), Advanced AI suffers ‘complete accuracy collapse’ in face of … https://www.theguardian.com/technology/2025/jun/09/apple-artificial-intelligence-ai-study-collapse
